DIGITAL RESPONSIBILITY GOALS

The Digital Responsibility Goals (DRGs) are a framework of guiding principles for a digital ecosystem that prioritises human identity, social cohesion, ethical design and trust. In the DRG4FOOD project, this framework serves both as a frame of reference and as a tool to guide the development of responsible solutions for a data-driven food system.

Apart from promoting the general vision of a better digital economy, the DRGs aim for three additional impacts:

- pre-empting negative effects of digitalisation by promoting digital responsibility from design to release;

- positioning responsible technology as a viable business model;

- increasing adoption of digital technology for the benefit of citizens.

To ensure a systematic approach to implementing the DRGs in the digital food ecosystem, the DRGs address different dimensions of digital technology that need to be improved in order to build a more trustworthy and responsible digital world. To embed digital responsibility into solutions and tools from day one, the DRG4FOOD toolbox lists technology enablers recognised as supporting one or more Digital Responsibility Goals.

HOW DO THE DRGS WORK?

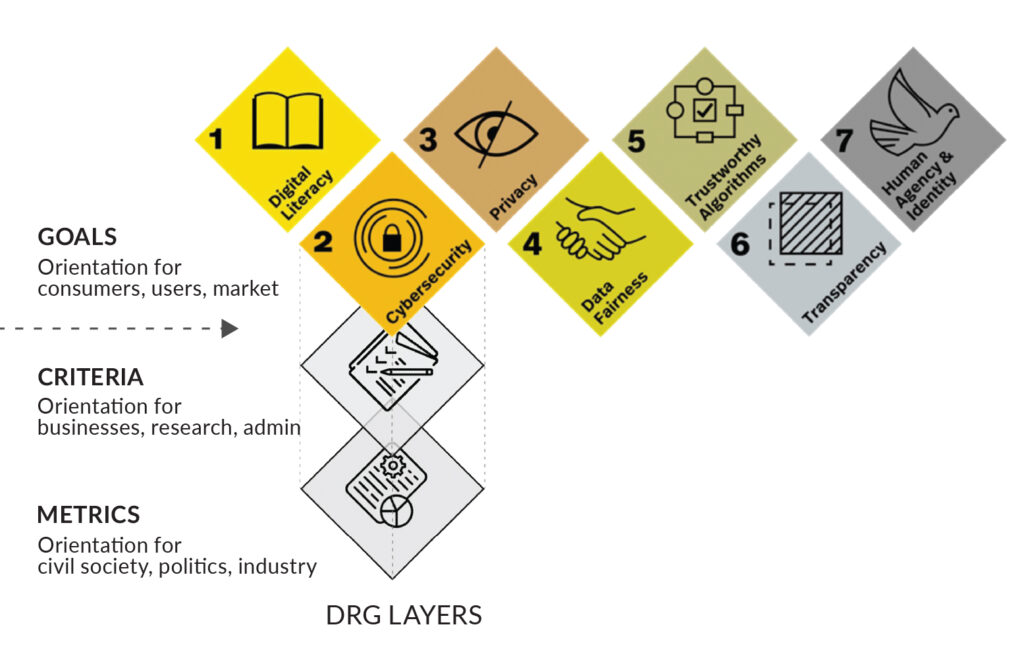

Think of the DRGs as having three layers, each fulfilling a different purpose and serving as orientation for different parts of society:

Goals: The top layer describes the key requirements and intention of the goal. It stands for one of the dimensions of the digital world that needs to be improved to build a more trustworthy ecosystem. On this layer the complexity of the digital world is encapsulated to facilitate the forming of a clear overarching vision.

Criteria: The middle layer contains the underlying guiding criteria for each goal (see table below). Here, businesses and developers find the instructions on how to act, from design to operation of a digital solution, in order to achieve the goal.

Metrics: The bottom layer is filled with metrics enabling us to evaluate to what extent, for example, a specific digital solution complies with the guiding criteria.
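The three layers can be pictured as a small data structure. The sketch below is only an illustration under stated assumptions: the class names `Goal` and `Criterion`, the function `compliance_share`, and the "share of criteria met" metric are hypothetical and are not defined by the DRG framework, and the criterion texts are shortened paraphrases of the Privacy criteria.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Criterion:
    """Middle layer: one guiding criterion, identified by its number (e.g. "3.2")."""
    number: str
    text: str


@dataclass
class Goal:
    """Top layer: one Digital Responsibility Goal with its guiding criteria."""
    name: str
    criteria: list[Criterion] = field(default_factory=list)


def compliance_share(goal: Goal, met: set[str]) -> float:
    """Bottom layer (hypothetical metric): fraction of a goal's criteria marked as met."""
    if not goal.criteria:
        return 0.0
    hits = sum(1 for c in goal.criteria if c.number in met)
    return hits / len(goal.criteria)


# Paraphrased Privacy criteria, shortened for illustration only.
privacy = Goal(
    name="Privacy",
    criteria=[
        Criterion("3.1", "Providers take responsibility for protecting user privacy."),
        Criterion("3.2", "Purpose limitation and data minimisation are respected."),
        Criterion("3.3", "Privacy protection covers the whole lifecycle, by default."),
        Criterion("3.4", "Users control their personal data and its use."),
        Criterion("3.5", "Providers account for how they protect privacy."),
    ],
)

# Assumed assessment result for some digital solution: three criteria met.
share = compliance_share(privacy, {"3.1", "3.2", "3.4"})
print(f"{privacy.name}: {share:.0%} of criteria met")
```

A real assessment would likely use richer metrics per criterion than a simple met/not-met flag; the point here is only how goals, criteria and metrics nest.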

DRG GUIDING CRITERIA

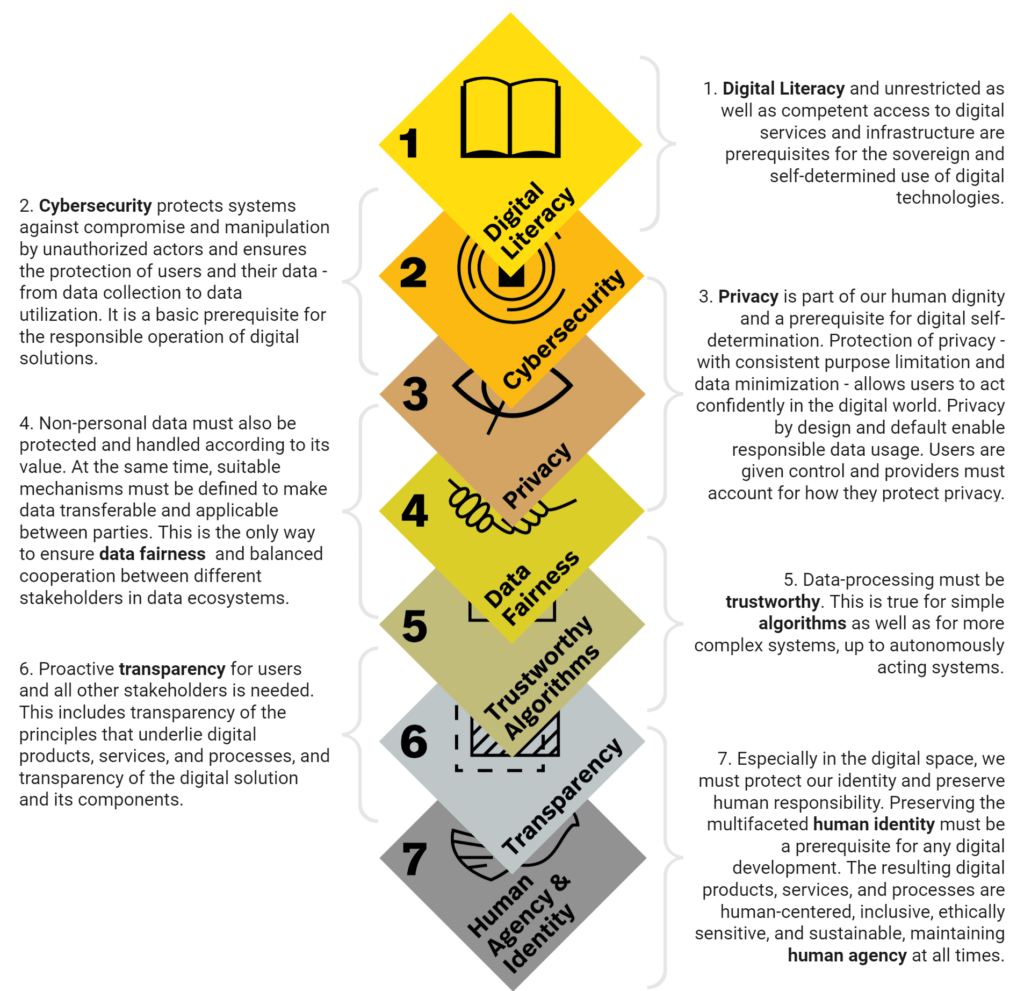

| Digital Literacy | Cybersecurity | Privacy | Data Fairness | Trustworthy Algorithms | Transparency | Human Agency and Identity |
| --- | --- | --- | --- | --- | --- | --- |
| Digital literacy and unrestricted as well as competent access to digital services and infrastructure are prerequisites for the sovereign and self-determined use of digital technologies. | Cybersecurity protects systems against compromise and manipulation by unauthorized actors and ensures the protection of users and their data - from data collection to data utilization. It is a basic prerequisite for the responsible operation of digital solutions. | Privacy is part of our human dignity and a prerequisite for digital self-determination. Protection of privacy - with consistent purpose limitation and data minimization - allows users to act confidently in the digital world. Privacy by design and by default enables responsible data usage. Users are given control, and providers must account for how they protect privacy. | Non-personal data must also be protected and handled according to its value. At the same time, suitable mechanisms must be defined to make data transferable and applicable between parties. This is the only way to ensure balanced cooperation between different stakeholders in data ecosystems. | Once data has been collected, it must be processed with the aim of trustworthiness. This is true for simple algorithms as well as for more complex systems, up to autonomously acting systems. | Proactive transparency for users and all other stakeholders is needed. This includes transparency of the principles that underlie digital products, services, and processes, and transparency of the digital solution and its components. | Especially in the digital space, we must protect our identity and preserve human responsibility. Preserving the multifaceted human identity must be a prerequisite for any digital development. The resulting digital products, services, and processes are human-centered, inclusive, ethically sensitive, and sustainable, maintaining human agency at all times. |
| 1.1 Information offered for digital products, services, and processes must be designed individually and in a way that is suitable for the target group. | 2.1 Developers and providers of digital products, services, and processes assume responsibility for cybersecurity. Users also bear a part of the responsibility. | 3.1 Developers and providers of digital products, services, and processes must take responsibility for protecting the privacy of their users. | 4.1 When collecting or re-using data, proactive care is taken to ensure the integrity of the data, considering whether any gaps, inaccuracies or bias might exist. | 5.1 Algorithms, their application, and the datasets they are trained on are designed to provide a maximum of fairness and inclusion. | 6.1 Organizations establish transparency - about digital products, services, and processes as well as the organization, business models, data flows, and technology employed. | 7.1 The preservation of the multifaceted human identity must be the basis for any digital development. Resulting digital technologies are user-centric, respect personal autonomy and dignity, and limit commoditization. |
| 1.2 Access to digital products, services, and processes must be reliable and barrier-free. | 2.2 Developers and providers of digital technology are responsible for appropriate security measures and constantly develop them further. Digital technologies are designed to be resistant to compromise. | 3.2 When dealing with personal data, basic principles of data protection are respected, in particular strict purpose limitation and data minimisation. | 4.2 In digital ecosystems, the exchange of data between all parties must be clearly described and regulated. The goal must be fair participation in the benefits achieved through the exchange of data. | 5.2 The individual and overall societal impact of algorithms is regularly reviewed and the review documented. Depending on the results, proportional corrective measures must be taken. | 6.2 Transparency is implemented through interactive communication (for example, between providers and users), and mechanisms for interaction are actively offered. | 7.2 Sustainability and climate protection must be part of the design choices of digital technologies and digital business models and must be implemented in practice (especially in accordance with the UN SDGs). |
| 1.3 Acceptance of digital products, services, and processes must be proactively considered in design and operation. This includes measures on equity, diversity and inclusion. | 2.3 A holistic view and appropriate implementation of cybersecurity are considered along the lifecycle, the value chain, and the entire service or solution. | 3.3 Privacy protection is considered throughout the entire lifecycle and should be the default setting. | 4.3 Developers and providers of digital technologies must clearly define and communicate the purpose for which they use and process data (including non-personal data). | 5.3 Outputs of algorithmic processing are comprehensible and explainable. Where possible, outputs should be reproducible. | 6.3 The application of digital technology is made transparent wherever there is an interaction between people and the digital technology (for example, the use of chatbots). | 7.3 Digital products, services, and processes promote responsible, non-manipulative communication. Where possible, communication takes place unfiltered. |
| 1.4 Education on the opportunities and risks of the digital transformation is essential - everyone is entitled to education on digital matters. | 2.4 Developers and providers of digital products, services, and processes must account for how they provide security for users and their data - while maintaining trade secrets. | 3.4 Users have control over their personal data and its use - including the rights to access, rectification, erasure, data portability, restriction of processing and avoidance of automated decision-making. | 4.4 When providing or creating datasets, the "FAIR" data principles are satisfied, especially in cases where re-use would benefit society as a whole. | 5.4 AI systems must be robust and designed to withstand subtle attempts to manipulate data or algorithms. | 6.4 In addition to transparency for users, transparency should also be provided for other stakeholders (e.g., businesses, science, governments) - while maintaining trade secrets. | 7.4 Digital technology always remains under human conception and control - it can be reconfigured throughout its deployment. |
| 1.5 Awareness of related topics such as sustainability, climate protection, and diversity/inclusion (e.g., along the UN SDGs) should be raised, where applicable. | 2.5 Business, politics, authorities, civil society and science must collaboratively shape the objectives and measures of cybersecurity. This requires open and transparent cooperation and disclosure. | 3.5 Providers must account for how they protect users' privacy and personal data - while maintaining necessary trade secrets. | 4.5 Users providing or creating data must be equipped with mechanisms to control and withdraw their data - they shall have a say regarding data usage policies. | 5.5 AI systems must be designed and implemented in a way that independent control of their mode of action is possible. | 6.5 Organizations must outline how they will make transparency verifiable and thus hold themselves accountable for their actions in the digital space. | 7.5 Digital technology may only be applied to benefit individuals and humankind and promote the wellbeing of humanity. |